
New study tracks pollution worldwide

- 8th May, 2025

- LATEST

A groundbreaking study has revealed a concerning picture of urban air quality and carbon emissions in 13,189 cities around the world. Using advanced supercomputing and geospatial modeling, researchers mapped concentrations of fine particulate matter (PM₂.₅), nitrogen dioxide (NO₂), and ozone (O₃), along with fossil-fuel CO₂ emissions, from 2005 to 2019. The findings are alarming: although some high-income countries achieved modest reductions, ozone levels rose by 6% globally and CO₂ emissions by 4%. PM₂.₅ levels stagnated, and NO₂ declined by a negligible 1%.

This comprehensive analysis, led by researchers from the George Washington University, highlights how persistent urban pollution is. Despite some localized improvements, more than half of the surveyed cities showed positive correlations among all the pollutants, indicating a widespread failure to decouple economic growth from environmental degradation. The study emphasizes that urban areas, which account for over 70% of global greenhouse gas emissions, are central to the climate crisis.

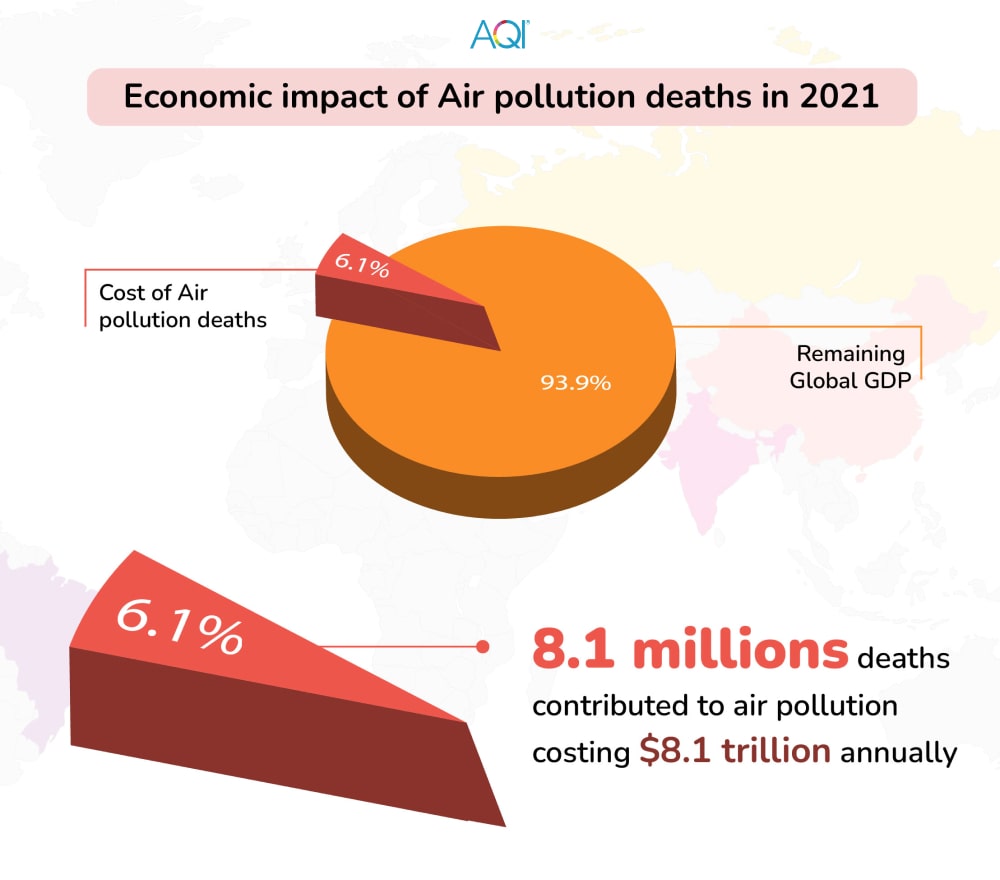

The economic consequences are equally alarming. Air pollution imposes a heavy burden on global economies: the World Bank estimates its annual cost at more than $8 trillion, over 6% of global GDP. These costs stem from healthcare spending, reduced labor productivity, and lower agricultural yields. In the United States alone, air pollution-related damages were estimated at $790 billion in 2014, about 5% of the nation’s GDP.

Furthermore, the health impacts are severe. Air pollution contributes to approximately 7 million premature deaths each year, making it the second leading risk factor for death globally after high blood pressure. The study's findings indicate that without aggressive and immediate policy interventions, these numbers are likely to rise, worsening public health crises and economic instability.

While supercomputing has offered an unprecedented view of the scale of urban pollution, the data suggests a bleak future. The study serves as a call to action for policymakers and global leaders to implement stringent environmental regulations and invest in sustainable urban planning. Without decisive action, the intertwined challenges of air pollution and climate change will continue to threaten planetary and human health.


Intel's recent developments highlight the company's ongoing struggles amid leadership changes, financial difficulties

- 1st May, 2025

- LATEST

The announcements from Intel Corporation paint a concerning picture of a tech giant facing internal and external pressures. Despite its efforts to reposition itself within the competitive semiconductor industry, Intel is encountering significant challenges that raise doubts about its future.

In the first quarter of 2025, Intel reported a net loss of $800 million on flat revenue of $12.7 billion. Its second-quarter forecast is equally discouraging: projected revenue of $11.2 billion to $12.4 billion falls short of analyst expectations. These numbers underscore Intel's ongoing difficulty in regaining its footing in a market increasingly dominated by competitors such as Nvidia and TSMC.
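For a sense of scale, the second-quarter guidance can be compared with first-quarter revenue. This short sketch uses only figures quoted in this article; the midpoint is a simple average of the range, not a number Intel published.

```python
def midpoint(low: float, high: float) -> float:
    """Simple average of a guidance range."""
    return (low + high) / 2


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


q1_revenue = 12.7              # $B, Q1 2025 (from the article)
q2_low, q2_high = 11.2, 12.4   # $B, Q2 guidance (from the article)
q2_mid = midpoint(q2_low, q2_high)

print(f"Q2 guidance midpoint: ${q2_mid:.1f}B")
print(f"Change vs Q1 at low/mid/high: "
      f"{pct_change(q1_revenue, q2_low):+.1f}% / "
      f"{pct_change(q1_revenue, q2_mid):+.1f}% / "
      f"{pct_change(q1_revenue, q2_high):+.1f}%")
print(f"Capex cut: {pct_change(20, 18):+.0f}%")  # $20B -> $18B
```

At the midpoint, the guidance implies revenue roughly 7% below the first quarter, with a range of about 2% to 12% lower.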

In response to its financial troubles, Intel has implemented substantial cost-cutting measures. The company plans to reduce capital expenditures by 10%, from $20 billion to $18 billion, and has announced potential workforce reductions exceeding 20%. These layoffs would follow the 15,000 job cuts Intel announced in August 2024, extending a concerning trend of downsizing.

Intel's leadership is also experiencing significant changes. Christoph Schell, the company's Chief Commercial Officer and sales lead, has announced his resignation, effective June 30. His departure adds to the instability at the executive level, raising concerns about the company's strategic direction during this critical period.

Intel's attempts to revitalize its foundry business have seen limited success. Although the company has attracted customer interest in test chips built on its upcoming 14A manufacturing process, these efforts are still in their infancy and may not bring immediate financial benefits.

The company's stock performance reflects growing investor skepticism. Despite posting better-than-expected first-quarter earnings, Intel's shares fell by up to 10%, erasing earlier year-to-date gains. This decline is attributed to concerns about global trade policy uncertainties, potential tariffs, and a looming recession, which could further hinder Intel's recovery.

As Intel navigates these challenging circumstances, the path ahead looks fraught with obstacles. The company's efforts to streamline operations and refocus on engineering excellence may not be enough to combat the competitive pressures and internal disruptions it faces. Intel risks falling further behind in the rapidly evolving semiconductor industry without a clear and effective strategy.


AI meets DNA: Scientists create custom gene editors with machine learning

- 23rd Apr, 2025

- LATEST

In a remarkable convergence of artificial intelligence and biotechnology, researchers at Mass General Brigham have introduced a groundbreaking method for engineering "bespoke enzymes" specifically designed for gene editing. This innovative approach utilizes machine learning to create enzymes with unprecedented precision, potentially revolutionizing treatments for a wide range of genetic disorders.

The Quest for Precision

Gene editing has long been recognized as a leading frontier in modern medicine, offering the promise of correcting genetic anomalies at their source. A persistent challenge, however, is specificity: making sure that edits occur exactly where intended, without off-target effects. Traditional gene-editing enzymes, while effective, often lack the precision such meticulous tasks require.
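To make "off-target effects" concrete: an editor such as Cas9 is steered by a guide sequence, but it can also act at sites that differ from the guide by a few letters, provided the short PAM motif it recognizes sits next to the site. The sketch below is a deliberately simplified scanner (forward strand only, mismatch counting only, a toy 10-letter guide instead of a realistic ~20-letter one). NGG is the standard PAM for SpCas9; the function names and sequences are hypothetical.

```python
def matches_pam(seq: str, pam: str) -> bool:
    """True if seq fits the PAM pattern, where 'N' matches any base."""
    return len(seq) == len(pam) and all(p == "N" or p == s for p, s in zip(pam, seq))


def mismatches(a: str, b: str) -> int:
    """Number of positions at which two equal-length sequences differ."""
    return sum(x != y for x, y in zip(a, b))


def find_sites(genome: str, guide: str, pam: str = "NGG", max_mm: int = 3):
    """Return (start, mismatch_count) for every guide-length window that is
    followed by a matching PAM and differs from the guide by at most max_mm
    bases. Forward strand only; a toy model of off-target search."""
    n, k = len(guide), len(pam)
    hits = []
    for i in range(len(genome) - n - k + 1):
        if not matches_pam(genome[i + n:i + n + k], pam):
            continue
        mm = mismatches(genome[i:i + n], guide)
        if mm <= max_mm:
            hits.append((i, mm))
    return hits


guide = "ACGTACGTAC"  # toy 10-letter guide (hypothetical)
genome = "TT" + "ACGTACGTAC" + "TGG" + "AA" + "ACGTACCTAC" + "AGG" + "C"
print(find_sites(genome, guide))  # [(2, 0), (17, 1)]: on-target plus one off-target
```

The second hit differs from the guide at a single base, yet it still sits next to a valid PAM, which is exactly the kind of site a more specific enzyme should ignore.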

This is where machine learning comes into play. By training algorithms on extensive datasets of enzyme structures and functions, the research team has developed models capable of predicting and designing enzyme variants with improved specificity. These custom-designed enzymes can precisely target genetic sequences, minimizing unintended alterations.
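The study's own models are not described in this article. As a hypothetical illustration of the general idea behind machine-learning-guided enzyme design, the sketch below fits the simplest possible additive model (each amino acid at each position adds a fixed amount to activity) to a handful of made-up measurements, then ranks untested variants. Real protein-engineering models are far richer; the positions, residues, and activity values here are invented.

```python
from collections import defaultdict
from itertools import product
from statistics import mean

# Hypothetical measurements: residues at three mutable positions -> activity (0..1).
TRAIN = [
    (("R", "Q", "K"), 0.20),
    (("R", "R", "K"), 0.55),
    (("K", "Q", "K"), 0.30),
    (("K", "R", "T"), 0.70),
    (("R", "Q", "T"), 0.35),
]


def fit_additive(data):
    """Estimate each (position, residue) effect as the mean deviation from the
    overall mean activity across the variants that carry it."""
    baseline = mean(y for _, y in data)
    deviations = defaultdict(list)
    for variant, y in data:
        for pos, aa in enumerate(variant):
            deviations[(pos, aa)].append(y - baseline)
    effects = {key: mean(vals) for key, vals in deviations.items()}
    return baseline, effects


def predict(model, variant):
    baseline, effects = model
    # (position, residue) pairs never seen in training contribute 0.
    return baseline + sum(effects.get((pos, aa), 0.0) for pos, aa in enumerate(variant))


def propose(model, choices_per_position, tested):
    """Rank every untested combination by predicted activity, best first."""
    untested = [v for v in product(*choices_per_position) if v not in tested]
    return sorted(untested, key=lambda v: predict(model, v), reverse=True)


model = fit_additive(TRAIN)
tested = {variant for variant, _ in TRAIN}
ranked = propose(model, [("R", "K"), ("Q", "R"), ("K", "T")], tested)
print(ranked[0])  # ('R', 'R', 'T')
```

The loop this illustrates is measure, fit, predict, and test the top-ranked untested variants next; the additive model is only a stand-in for whatever learner the researchers actually used.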

A Symphony of Science and Technology

Dr. Rachel A. Silverstein, the study's lead author, stated, "This is a paradigm shift. By integrating machine learning into enzyme design, we're not just refining existing tools—we're creating entirely new instruments for gene editing."

The implications of this technology are profound. It could lead to more effective and safer treatments for conditions such as cystic fibrosis, sickle cell anemia, and certain types of cancer. Additionally, the adaptability of this approach means it can be customized to fit individual genetic profiles, paving the way for an era of personalized medicine.

Looking Ahead

Although the research is still in its early stages, the results are promising. The team is now focusing on refining the algorithms and conducting preclinical studies to evaluate the efficacy and safety of these bespoke enzymes in living organisms.

Dr. Ben Kleinstiver, a co-author of the study, emphasized the collaborative nature of the project: "This achievement is the culmination of interdisciplinary efforts, bringing together experts in computational biology, genetics, and molecular engineering."

As the lines between biology and technology continue to blur, innovations like this underscore the transformative potential of interdisciplinary research. The intersection of machine learning and gene editing not only showcases scientific ingenuity but also offers hope for countless individuals affected by genetic diseases.


German-built simulations offer hope for honeybee conservation

- 18th Apr, 2025

- LATEST

Scientists in Germany, funded by the Federal Ministry of Food and Agriculture, have devised a new approach to studying the effects of pesticides on honeybee colonies that could inform strategies to protect them. By integrating artificial intelligence (AI) with advanced supercomputer modeling, the researchers built a system that links the exposure of individual bees to neonicotinoid pesticides with the overall health of their colonies.

The research, published in Environmental Science & Technology, involved exposing honeybees to sublethal doses of neonicotinoids and monitoring their foraging behavior with AI-based camera technology. The collected data were analyzed with BEEHAVE, a simulation model designed to investigate how stressors affect honeybee colony dynamics. The findings revealed that even low levels of pesticide exposure reduced pollen-foraging efficiency at both the individual and the colony level.

A particularly encouraging aspect of this study is the reproducibility of the results. The team successfully replicated findings from a 2019 field experiment, demonstrating the robustness of their methodology. This consistency is significant, given the inherent variability in honeybee behavior that often complicates the detection of statistically significant effects.
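One common way to ask whether a difference between exposed and control bees could be due to chance alone is a permutation test: shuffle the group labels many times and see how often a difference at least as large appears. This is a generic sketch, not the study's statistical method, and the foraging scores are hypothetical.

```python
import random
from statistics import mean


def permutation_test(control, exposed, n_perm=10_000, seed=0):
    """Two-sided permutation test on the difference in group means.

    Returns (observed difference, p-value). The p-value is the share of
    random relabelings whose |difference| is at least as large as observed.
    """
    rng = random.Random(seed)
    observed = mean(control) - mean(exposed)
    pooled = list(control) + list(exposed)
    n = len(control)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if abs(mean(pooled[:n]) - mean(pooled[n:])) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimate away from an impossible p = 0.
    return observed, (extreme + 1) / (n_perm + 1)


# Hypothetical pollen-foraging scores per bee (not study data).
control = [8.1, 7.9, 8.4, 8.0, 7.7, 8.3]
exposed = [7.2, 6.9, 7.5, 7.1, 7.4, 6.8]
diff, p = permutation_test(control, exposed)
print(f"difference = {diff:.2f}, p = {p:.4f}")
```

When individual bees vary widely, the shuffled differences spread out and the same observed gap yields a larger p-value, which is why consistent replication across experiments carries weight.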

The implications of this research are extensive. By establishing a clear connection between individual bee behavior and colony health, the study provides a valuable tool for assessing the risks associated with pesticide use. This approach could inform more bee-friendly agricultural practices and guide policy decisions to conserve these essential pollinators.

As honeybees play a crucial role in pollinating crops and maintaining biodiversity, developing such predictive models represents a significant advancement. By harnessing the power of AI and simulation, scientists are better equipped to protect honeybee populations and ensure their ongoing contribution to ecosystems and agriculture.


New supercomputer models show intensifying wildfires in a warming world

- 12th Feb, 2025

- LATEST

Recent research from the Institute for Basic Science in Korea has used advanced supercomputer simulations to investigate how climate change affects global wildfire patterns. The simulations show that rising temperatures and changes in vegetation and humidity are driving an increase in wildfire intensity worldwide, while lightning plays only a minor role as an ignition source compared with these environmental changes. The findings improve our understanding of future wildfire risks and could support better prediction and management strategies.

According to the study, rising greenhouse gas emissions are projected to increase global lightning frequency by approximately 1.6% for each degree Celsius of global warming. More lightning could mean more wildfires in regions such as the eastern United States, Kenya, Uganda, and Argentina. However, lightning is not the main driver of the expanding area burned each year; that role belongs to shifts in global humidity and accelerated vegetation growth, which supplies more fuel for fires.

Dr. Vincent Verjans, the study's lead author, warns that global warming has significant effects on ecosystems, infrastructure, and human health. Each degree of warming is estimated to increase the global mean annual area burned by wildfires by 14%. The study identifies southern and central equatorial Africa, Madagascar, Australia, and parts of the Mediterranean and western North America as the regions most vulnerable to intensified fires due to climate change.
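The article gives per-degree rates but not how they combine over several degrees of warming. The sketch below shows both readings, compounding and simple linear scaling, using the 1.6% (lightning) and 14% (burned area) figures quoted here; which reading the study's models imply is an assumption.

```python
def scale_per_degree(rate: float, warming_c: float, compound: bool = True) -> float:
    """Multiplicative change after `warming_c` degrees of warming at `rate`
    per degree, either compounding or growing linearly."""
    if compound:
        return (1 + rate) ** warming_c
    return 1 + rate * warming_c


LIGHTNING_RATE = 0.016     # ~1.6% more lightning per °C (from the article)
BURNED_AREA_RATE = 0.14    # ~14% more area burned per °C (from the article)

for warming in (1.0, 2.0, 3.0):
    area_c = scale_per_degree(BURNED_AREA_RATE, warming)
    area_l = scale_per_degree(BURNED_AREA_RATE, warming, compound=False)
    lightning = scale_per_degree(LIGHTNING_RATE, warming)
    print(f"+{warming:.0f} °C: burned area x{area_c:.3f} (compound) "
          f"or x{area_l:.3f} (linear); lightning x{lightning:.3f}")
```

At 2 °C the two readings differ only slightly (about 30% versus 28% more burned area), but the gap widens with further warming.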

Furthermore, the study highlights the cascading effects of increased wildfires on air pollution and sunlight penetration. As smoke plumes from wildfires grow, they contribute to regional temperature changes. The authors note that while the new supercomputer model simulations account for the direct aerosol effects of wildfires, further research is needed to fully understand how fires may impact cloud formation and subsequent surface temperatures.

While this study provides crucial insights into the complex interactions between climate change, lightning, and wildfires, it also highlights open questions that require deeper examination. For instance, the researchers are concerned that current climate models may underestimate future Arctic wildfire risk, with implications for aerosol release and air quality.

The study calls for action to confront the growing threat of intensifying wildfires in a warming world and emphasizes the need for comprehensive Earth system models to better understand and mitigate the far-reaching impacts of wildfires on our planet.


Spinning neutron stars and the birth of enormous magnetic fields

- 4th Feb, 2025

- LATEST

UK scientists unravel the mystery behind low-field magnetars

A groundbreaking study led by an international team of researchers has revealed the long-sought mechanism behind the formation of low-field magnetars: neutron stars whose magnetic fields, though still extremely powerful, are much weaker than those of typical magnetars.

The team modeled the magneto-thermal evolution of neutron stars using advanced numerical simulations. Their findings point to the Tayler-Spruit dynamo, triggered by the fallback of supernova material, as a crucial factor in the development of these magnetic fields. The discovery resolves a puzzle that has intrigued astrophysicists for more than a decade, since low-field magnetars were first identified in 2010.

Decoding the magnetic marvels of the Universe

Dr. Andrei Igoshev, the lead author and a research fellow at Newcastle University's School of Mathematics, Statistics, and Physics, emphasized the significance of this discovery:

"Neutron stars are born from supernova explosions. While most of the outer layers of a massive star are expelled during the explosion, some material falls back onto the newly formed neutron star, causing it to spin faster. Our study demonstrates that this process is fundamental in generating magnetic fields through the Tayler-Spruit dynamo mechanism. Although this mechanism was theorized nearly 25 years ago, we have only now been able to reproduce it using computer simulations. The magnetic field generated this way is incredibly complex, with an internal field much stronger than we observe externally."

Magnetars are known for their astonishingly strong magnetic fields, which can be hundreds of trillions of times stronger than Earth's. These intense fields make magnetars some of the brightest and most variable sources of X-ray radiation in the Universe. Surprisingly, some neutron stars with significantly weaker magnetic fields show similar X-ray emissions, which is why they are classified as low-field magnetars. This study provides the clearest evidence yet that a dynamo process, in which moving plasma generates magnetic fields, can explain their formation.
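The "hundreds of trillions" comparison can be checked with typical textbook values: Earth's surface field is roughly 0.25 to 0.65 gauss, and magnetar fields are commonly quoted at about 10¹⁴ to 10¹⁵ gauss. These ranges are general approximations, not figures from the study.

```python
EARTH_SURFACE_G = (0.25, 0.65)   # typical range at Earth's surface, in gauss
MAGNETAR_G = (1e14, 1e15)        # commonly quoted magnetar field range, in gauss


def ratio_range(strong: tuple[float, float], weak: tuple[float, float]) -> tuple[float, float]:
    """Smallest and largest possible ratio strong/weak across both ranges."""
    return strong[0] / weak[1], strong[1] / weak[0]


low, high = ratio_range(MAGNETAR_G, EARTH_SURFACE_G)
print(f"{low:.1e} to {high:.1e} times Earth's field")
```

That works out to roughly 150 trillion to 4 quadrillion times, so "hundreds of trillions" describes the lower end of the range.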

Supercomputer simulations and the future of neutron star research

This discovery not only answers a long-standing question in astrophysics but also paves the way for future research into the intricate nature of neutron star magnetism. Dr. Igoshev is leading the establishment of a new research group at Newcastle University dedicated to further exploring these fascinating cosmic objects. By harnessing the power of supercomputer simulations, his team aims to investigate the hidden mechanisms that govern the life cycles of neutron stars.

"The universe is full of mysteries waiting to be unraveled," Dr. Igoshev noted. "With advanced simulations and innovative research, we are one step closer to understanding the incredible forces shaping the cosmos."

This breakthrough highlights the power of collaboration and cutting-edge technology in unveiling the secrets of the Universe, inspiring the next generation of scientists to push the boundaries of astrophysical exploration.


EXTOLL partners with BeammWave, GlobalFoundries to develop next-gen high-speed ASICs

- 31st Jan, 2025

- LATEST

EXTOLL, a leader in high-speed, ultra-low-power SerDes and chiplet connectivity, has been selected as a key SerDes IP partner by BeammWave for its upcoming communication ASIC development. This collaboration utilizes GlobalFoundries' (GF) 22nm FD-SOI process technology, 22FDX, to create advanced solutions for mmWave 5G/6G digital beamforming.

The partnership underscores EXTOLL's expertise in ultra-low-power design, ensuring energy-efficient, high-performance chiplet-based solutions to meet the increasing demand for multi-lane connectivity. EXTOLL's SerDes IP core supports data transmission speeds of up to 32 Gbps per lane and is compatible with GF's 22FDX, 12LP, and 12LP+ platforms.
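Per-lane speed translates into aggregate bandwidth by multiplying by the lane count. The sketch below assumes the quoted 32 Gbps is a raw line rate and lets the caller apply a line-coding efficiency; 64b/66b is used purely as an example, since the article does not say which encoding EXTOLL's IP uses.

```python
def aggregate_gbps(lanes: int, line_rate_gbps: float = 32.0,
                   coding_efficiency: float = 1.0) -> float:
    """Aggregate payload bandwidth across lanes.

    line_rate_gbps: raw per-lane rate (32 Gbps per the article).
    coding_efficiency: payload fraction after line coding (assumed; 1.0 = raw).
    """
    if lanes < 1:
        raise ValueError("lanes must be at least 1")
    if not 0 < coding_efficiency <= 1:
        raise ValueError("coding_efficiency must be in (0, 1]")
    return lanes * line_rate_gbps * coding_efficiency


for lanes in (1, 4, 8, 16):
    raw = aggregate_gbps(lanes)
    payload = aggregate_gbps(lanes, coding_efficiency=64 / 66)  # example 64b/66b
    print(f"{lanes:2d} lanes: {raw:6.1f} Gbps raw, {payload:6.1f} Gbps after 64b/66b")
```

Keeping the coding efficiency as a parameter avoids baking in an encoding the article does not specify.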

Advancing 5G/6G with energy-efficient solutions

Dirk Wieberneit, CEO of EXTOLL, highlighted the importance of this collaboration, stating, "This partnership showcases EXTOLL's strength in ultra-low-power design, particularly on GF's 22FDX process technology, enabling future communication innovations. We are honored to partner with BeammWave in shaping the future of digital beamforming technology."

GlobalFoundries' 22FDX technology is renowned for superior RF/mixed-signal performance, power efficiency, and system-on-chip (SoC) integration. It provides an ideal foundation for next-generation beamforming applications, where power efficiency and high-speed connectivity are crucial.

Industry leaders applaud the collaboration

Per-Olof Brandt, BeammWave's Chief Technology Officer, expressed enthusiasm for the partnership: "We are thrilled to collaborate with EXTOLL on their industry-leading Very Short Reach SerDes technology in 22nm. Their ongoing innovation in this area allows us to deliver best-in-class power and performance for our ambitious mmWave 5G/6G products."

Ziv Hammer, Senior Vice President of Design Platforms and Services at GlobalFoundries, emphasized the growing market demand for 22FDX technology and the impact of EXTOLL's innovations: "As demand for beamforming applications increases, we are excited to see our IP partner EXTOLL continue to innovate on this technology node. Their ultra-low-power interconnect solutions enable next-generation chiplet technologies, reinforcing our commitment to supporting global connectivity."

Shaping the future of high-speed connectivity

As demand for high-speed, energy-efficient connectivity grows, the collaboration between EXTOLL, BeammWave, and GlobalFoundries aims to set a new benchmark in 5G/6G digital beamforming technology. By combining GF's 22FDX process technology, EXTOLL's advanced SerDes IP, and BeammWave's mmWave expertise, the partnership seeks to drive the future of ultra-fast, energy-efficient communications.
