
QuTech takes important step in quantum supercomputing with error correction

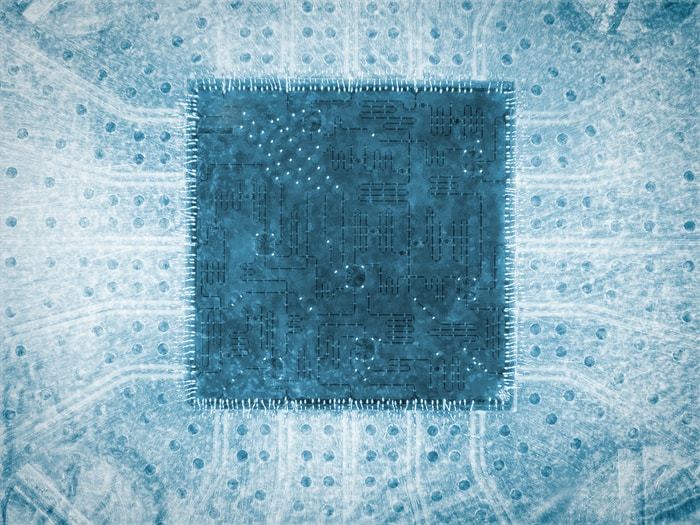

Researchers at QuTech, a collaboration between TU Delft (the oldest and largest Dutch public technical university) and TNO (the Netherlands Organisation for Applied Scientific Research), have reached a milestone in quantum error correction. They have integrated high-fidelity operations on encoded quantum data with a scalable scheme for repeated data stabilization.

Physical quantum bits, or qubits, are vulnerable to errors, which arise from various sources, including quantum decoherence, crosstalk, and imperfect calibration. Fortunately, the theory of quantum error correction holds that it is possible to compute while simultaneously protecting quantum data from such errors.

“Two capabilities will distinguish an error-corrected quantum computer from present-day noisy intermediate-scale quantum (NISQ) processors”, says Prof Leonardo DiCarlo of QuTech. “First, it will process quantum information encoded in logical qubits rather than in physical qubits (each logical qubit consisting of many physical qubits). Second, it will use quantum parity checks interleaved with computation steps to identify and correct errors occurring in the physical qubits, safeguarding the encoded information as it is being processed.” According to theory, the logical error rate can be suppressed exponentially, provided that the physical error rate is below a threshold and that the circuits for logical operations and stabilization are fault-tolerant.
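The parity checks DiCarlo describes can be illustrated with their simplest classical analogue, a three-bit repetition code. This is a hedged sketch, not QuTech's method: it only handles bit flips on classical bits, whereas the experiment uses quantum parity checks on transmon qubits, and the function names are hypothetical. What it does show is that two parity checks (b0 XOR b1 and b1 XOR b2) reveal where a single error occurred without revealing the encoded value itself.

```python
# Classical analogue of parity-check error correction: a 3-bit repetition code.
# Illustration only; this is NOT the quantum code used in the experiment.


def encode(bit: int) -> list[int]:
    """Encode one logical bit redundantly into three physical bits."""
    return [bit, bit, bit]


def syndrome(bits: list[int]) -> tuple[int, int]:
    """Measure the two parity checks (b0 XOR b1, b1 XOR b2)."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


# Each syndrome points to the single flipped bit (None means no error seen).
LOOKUP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(bits: list[int]) -> list[int]:
    """Flip the bit indicated by the syndrome, assuming at most one error."""
    fixed = list(bits)
    pos = LOOKUP[syndrome(fixed)]
    if pos is not None:
        fixed[pos] ^= 1
    return fixed


if __name__ == "__main__":
    for logical in (0, 1):
        for flip in range(3):
            word = encode(logical)
            word[flip] ^= 1  # inject a single bit-flip error
            assert correct(word) == encode(logical)
    print("all single bit-flips corrected")
```

Note that the syndrome is identical for encoded 0 and encoded 1 with the same flipped position, which is why checks of this kind can locate errors without disturbing the stored information. Two simultaneous flips would be mis-corrected, which is where larger codes come in.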

All the operations

The basic idea, then, is that if you increase the redundancy and use more and more qubits to encode data, the net error goes down, provided the physical error rate is below threshold. The researchers at TU Delft, together with colleagues at TNO, have now taken a major step toward this goal, realizing a logical qubit consisting of seven physical qubits (superconducting transmons). “We show that we can do all the operations required for computation with the encoded information. This integration of high-fidelity logical operations with a scalable scheme for repeated stabilization is a key step in quantum error correction”, says Prof Barbara Terhal, also of QuTech.
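A back-of-the-envelope calculation shows why adding redundancy helps only below a threshold. The sketch below assumes a toy classical model (hypothetical, not the experiment's noise model): each of n copies of a bit flips independently with probability p, and the logical value is recovered by majority vote. In this model the threshold is p = 0.5; quantum codes have much lower thresholds, but the qualitative behavior is the same.

```python
from math import comb


def logical_error_rate(n: int, p: float) -> float:
    """Probability that a majority vote over n noisy copies of a bit is wrong.

    Toy model (assumption): n is odd and each copy flips independently
    with probability p. The vote fails when more than half the copies flip.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd so the vote cannot tie")
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


if __name__ == "__main__":
    for p in (0.01, 0.6):  # below and above this toy model's threshold of 0.5
        rates = [logical_error_rate(n, p) for n in (1, 3, 5, 7)]
        print(f"p = {p}: " + ", ".join(f"{r:.2e}" for r in rates))
```

With p = 0.01 the failure probability shrinks as n grows (from 0.01 at n = 1 to about 3e-4 at n = 3), while with p = 0.6 every extra copy makes matters worse.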

First author and Ph.D. candidate Jorge Marques further explains: “Until now researchers have encoded and stabilized. We now show that we can compute as well. This is what a fault-tolerant computer must ultimately do: process and protect data from errors all at the same time. We do three types of logical-qubit operations: initializing the logical qubit in any state, transforming it with gates, and measuring it. We show that all operations can be done directly on encoded information. For each type, we observe higher performance for fault-tolerant variants over non-fault-tolerant variants.” Fault-tolerant operations are key to reducing the build-up of physical-qubit errors into logical-qubit errors.

Long term

DiCarlo emphasizes the multidisciplinary nature of the work: “This is a combined effort of experimental physics, theoretical physics from Barbara Terhal’s group, and also electronics developed with TNO and external collaborators. The project is mainly funded by IARPA and Intel Corporation.”

“Our grand goal is to show that as we increase encoding redundancy, the net error rate decreases exponentially”, DiCarlo concludes. “Our current focus is on 17 physical qubits and next up will be 49. All layers of our quantum computer’s architecture were designed to allow this scaling.”
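The qubit counts DiCarlo mentions (7, 17 and 49) fit a common layout, the rotated surface code, in which a code of distance d uses d² data qubits plus d² − 1 ancilla qubits for the parity checks, or 2d² − 1 in total. The article does not name the code, so treat this mapping as an assumption. The sketch also evaluates a widely used below-threshold heuristic, p_L ≈ A(p/p_th)^⌊(d+1)/2⌋, with placeholder values for A, p and p_th that are purely illustrative, not QuTech measurements.

```python
def rotated_surface_code_qubits(d: int) -> int:
    """Total physical qubits in a distance-d rotated surface code (assumed layout):
    d*d data qubits plus d*d - 1 ancilla qubits that measure the parity checks."""
    return 2 * d * d - 1


def heuristic_logical_error(p: float, p_th: float, d: int, a: float = 0.1) -> float:
    """Below-threshold heuristic p_L ~ a * (p / p_th) ** ((d + 1) // 2).

    `a`, `p` and `p_th` are hypothetical placeholders for illustration only.
    """
    return a * (p / p_th) ** ((d + 1) // 2)


if __name__ == "__main__":
    for d in (2, 3, 5):
        print(f"distance {d}: {rotated_surface_code_qubits(d)} physical qubits")
    # Hypothetical physical error rate of 0.1% against a hypothetical 1% threshold.
    for d in (3, 5, 7):
        print(f"distance {d}: p_L ~ {heuristic_logical_error(0.001, 0.01, d):.1e}")
```

Distances 2, 3 and 5 give 7, 17 and 49 physical qubits. Under the heuristic, each increase of the distance by two multiplies the logical error rate by another factor of p/p_th, which is the exponential suppression DiCarlo refers to, provided p stays below p_th.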